Denounce, deny, deceive

The manipulation playbook of anti-science actors

Background:

“The only thing the COVID vaccinations stop is the heart”

… outrageous lies catch eyeballs. That is what they are designed to do. While sometimes shocking in their maliciousness, it is no secret that the internet is rife with many such anti-science falsehoods.

Quacks, fake experts, influencers, political commentators, trolls, gurus, charlatans, contrarians, pundits and other media manipulators currently dominate the public discourse on a range of medical and scientific topics. Manipulators build small digital fiefdoms where the most outrageous or popular, not the most correct, get to shape the particular flavor of alternative reality. Manipulators also reap windfall profits from leveraging algorithmically created echo chambers and filter bubbles.

From anti-vaxxers to lableak truthers, from climate contrarians to AIDS deniers, supplement grifters, and alternative medicine charlatans, each parallel universe comes with its little niche rituals, idiosyncratic fallacies, and historical trajectory. They are all part of the current fragmentation of reality perceptions and epistemic crisis plaguing society.

However, what all these fragmented groups have in common is a shared animosity towards “mainstream” science (read: evidence-based science that happens to go against their particular belief or worldview). This is no accident. To sustain their often mighty profits or influence, most media manipulators need to subvert science, or at least the public’s understanding of it.

Yet science is held in high regard by a majority of the population, and directly attacking it is unpopular at best. Nobody openly advocates becoming a science denier, nor would they accept such a label. So how do media manipulators go about discrediting inconvenient scientific knowledge? And why are their alternative explanations so appealing to us?

In this article, I have curated some common tactics anti-science actors use to manipulate discourse, sow doubt, foster distrust in institutions, and attack any unwelcome scientific finding, be it about climate change, vaccination, evidence-based medicine, public health interventions, or one of my pet peeves, the origins of SARS-CoV-2.

Let’s see how many you have encountered before.

The archetypes

Identifying the ‘Merchants of Doubt’ in public discourse

“The discourse holds so many traps for the unwary. It’s a bit like financial scams and gambling that are a tax on the financially naïve. A similar tax is being levied on the intellectually naïve.” — Prof. Matthew Browne from Decoding the Gurus podcast (personal communication)

1) The “just-asking” Questioners

Questions, just like statements, can trigger all sorts of cognitive biases.

In any discourse, questions act as a collection of possibilities with attached social expectations, such as that the “question set” in the conversation is something everyone is committed to answering. (Portner, P. Semantics, 2004)

This social feature opens the door for bad actors to covertly shape the conversation through the tactical use of illegitimate, dishonest, misleading, or manipulative questions. (Related tactic: Sealioning)

Psychologically, the ‘just asking questions’ and ‘sealioning’ tactics work by exhausting the opponent and abusing the audience’s susceptibility to “framing effects”. Framing influences subconscious decision-making by supplying positive or negative connotations. (Tversky & Kahneman, Science, 1981 & Keren, G. Perspectives on framing. Psychology Press, 2011)

The sneaky part is that manipulative framing effects even persist when people are asked to think deeper about the problem or justify their reasoning. (LeBoeuf et al., Behavioral Decision Making, 2003)

In theory, questions are there to help us solicit information we don’t know.

In reality, though, what we ask has an impact on how a conversation unfolds. Good questions lead us down the right path, towards the truth or some other goal we have (The decision lab, 2021).

Bad questions lead us astray, and malicious actors can weaponize them to shape a conversation in a way that only benefits them. — The decision lab, 2021

When confronted, however, manipulators simply resort to saying that they are just “asking questions” because they want to find out the truth. (They usually lament how “people can’t just ask questions anymore.”)

Almost without exception, people “just asking questions” have made up their minds. They just do not want to say the quiet part out loud.

A quick “just asking questions” case study:

Public discussions around the scientific evidence for a zoonotic origin of Covid get intentionally derailed by constant repetition of variations of the following questions:

1) How can scientists discard a lableak without any investigation?

2) How do we know that Chinese scientists aren’t lying about everything?

3) Why would anybody be against getting to the bottom of this?

Why do these questions work? For starters, notice that all three frame things in a very negative light. They paint the picture that we have no idea what is going on, that we are completely in the dark scientifically, or worse, that we have been lied to by both scientists and untrustworthy adversaries, that something untoward has happened and is being covered up.

This, of course, largely misrepresents reality and ignores that:

there is a large body of scientific evidence, produced by hundreds of scientists, that points towards zoonosis and makes any research-related origin scientifically implausible (albeit not impossible; it is hard to prove that something did not happen). This is an emerging consensus among domain experts (Holmes et al., Cell, 2021, Jiang & Wang, Science, 2022, Keusch et al., PNAS, 2022, Goodrum F et al., Virology, 2023).

there were multiple occasions where teams of independent investigators went to ask questions: first a ‘rapid response’ by the WHO-China joint mission involving independent scientists from the international community in early 2020; then another specific WHO origins investigation in 2021, which assessed any lableak scenario as “extremely unlikely”; a US intelligence agencies investigation; and a currently ongoing SAGO mission which keeps tabs on the body of origin literature. All failed to turn up any evidence of a lab leak but turned up plenty of evidence of zoonotic spillover.

Trust has been an issue throughout. However, many critical findings by Chinese scientists have been independently corroborated, were undertaken with global collaborators, and are consistent with published literature.

More maliciously (and manipulatively), the above questions also mislead audiences by creating an implicit false dichotomy: Either you want (yet another) investigation, or you must be against getting to the bottom of this issue.

This ignores the possibility that another investigation into the lableak theory might actually not be required to clarify this issue scientifically (see consensus above).

It obfuscates the political costs that unreasonable grandstanding will bring. For example, focusing resources and political capital on improbable lab-based routes might prevent other, more pertinent avenues (e.g. wildlife routes, farms, smugglers) from being explored instead.

It pays no attention to the reality of Chinese geopolitics or to the corollaries of implicitly blaming Chinese scientists. Baseless blame sows more distrust and sabotages international scientific collaboration and data sharing on multiple levels for present and future pandemic prevention efforts.

The false dichotomy also ignores the fact that it is highly unlikely that any post-mortem investigation of Chinese labs 3 years after the outbreak can even find anything relevant.

So there are good reasons to be skeptical about the cost-benefit ratio of unenforceable and empty demands for another investigation.

No scientist will ever object to getting more data; the question is how to go about it.

Depolarization and collaboration might be a more fruitful strategy after years of ineffective blame games, political grandstanding, and stagnation. The proper framing should be: Do you want more of the same, or do you want to try something other than grandstanding?

Finally, and perhaps most importantly, the above questions are not asked in earnest but repeated constantly to distract from the scientific evidence we do have, and those asking them are fundamentally incurious about the answers given.

That’s the point. Many manipulators use these questions to try to deny or de-legitimize any scientific consensus on the origin issue because it serves their financial, social, or political interests (as the witch hunts in US Congress will show this year, but that’s another story).

Alright, let’s move on.

2) The compelling Storytellers

It would seem that mythological worlds would have been built up only to be shattered again, and that new worlds were built from the fragments. — Lévi-Strauss

We humans are a story-telling species. Stories help us navigate life by reducing the complexity of the natural and social world and maintaining social bonds or group-level cooperation. Storytelling represents a key element in the creation and propagation of culture; it is how we make sense of non-routine, uncertain, or novel situations (Currie & Sterelny, 2017, Bietti et al., Topics in Cog Sci., 2019).

Sensationalist, intriguing, emotional, or outrageous stories can shape public conversations, even when they are baseless, speculative, or plainly false. This is because our judgment of truth draws mostly on personal experience, memory, and feelings, not analytical processing (Brashier & Marsh, Annual Review of Psychology, 2020).

Stories as sense-making devices are full of peripheral cues such as familiarity, processing fluency, and cohesion which create an “illusory truth effect” (Wang et al., J. Cognit. Neurosci. 2016 & Unkelbach et al., Cognition, 2017).

For a very long time, this power of storytelling has been used by leaders, powerholders, and manipulators alike to further their goals.

Storytelling can be seen as a transaction in which the benefit to the listener is information about his or her environment, and the benefit to the storyteller is the elicitation of behavior from the listener that serves the former’s interests — Sugiyama MS, Human Nature, 1996

In our current broken information ecosystems, media manipulators are interested in creating and selling engaging stories for their bottom line, and this helps the spread of sensationalist news, juicy gossip, outrageous lies, and conspiracy myths.

Even worse, science suffers a dramatic disadvantage when it comes to storytelling because it is bound by fact. It is also often nuanced, jargoned, and hard to explain to non-experts. Not a good combo for an asymmetric social media landscape that prefers quick, short, overconfident, and eyeball-catching content. Content that tells us what we want to hear, not what is necessarily accurate or true.

Facts cannot be optimized for audience engagement, but fictions can.

A compelling fiction spreads way better on social media than a frustrating truth.

A case study:

What to make of the “Ivermectin against Covid” debacle, or for some “the cure they don’t want you to know about”? Miracle cures are an idea almost as old as the spoken word, and stories about them hold the promise to fulfill many psychological needs that science can hardly compete with.

Let’s do a quick narrative comparison:

(Truth) Scientific studies show with high confidence that Ivermectin has no plausible mechanism against Covid-19 and no efficacy when it comes to Covid-19 prevention, disease trajectory, or death rate.

(Fiction) Ivermectin is a safe and effective miracle cure against Covid-19 and pharmaceutical companies try to throttle its use to maintain their profits (or other secret agendas like pushing vaccines)

Why is the (true) narrative a much worse story than the wishful falsehood?

We already mentioned that stories are how we make sense of our world and environment. Because of the high uncertainty stemming from the pandemic, together with real experiences of often arbitrary death, illness, and recovery, we all were put into a position of powerlessness.

In that position, when science has few or no easy solutions on offer (yet), many people might turn to alternative sources for information to ‘figure this thing out’. Anecdotes of vitamins, supplements, and rituals that helped against Covid started filling up the internet. Maybe there is hope? In this position, taking a supposed ‘miracle’ cure and doing something is psychologically way more salient than doing nothing; it gives people a feeling of control back.

Among the anecdotes comes a very powerful one: Ivermectin, an old drug that is super safe, cheap, and widely used, shows some efficacy against Covid in cell culture experiments. Also, some epidemiological studies in developing countries seemingly find a health signal with Ivermectin. Multiple clinical trials are announced all over the world. Although the biological mechanism of Ivermectin is understood and has nothing to do with anti-viral mechanisms, it is linked to some viral proteins through shaky in silico binding studies. This, together with poorly performed and (in a few cases) fraudulent small clinical trials, eventually creates enough buzz and superficial plausibility for the Ivermectin story to break through and become mainstream.

This mainstream attention brings Ivermectin into conflict with science and public health authorities, who rightfully have to caution people, warn about side effects and false promises, and with it, shoot down hopes of regaining control, of salvation.

Many people cannot stomach hearing it. And this is where the storytellers come in to fill that gap. Maybe there is a sinister reason why the ‘authorities’ would be against the miracle cure? Don’t we all know corruption between “big pharma” and the government is a thing? Did you see the horse-paste smear campaigns against Ivermectin from the government? I know so many people that took Ivermectin and never got Covid! Did you know Ivermectin has no patent protection anymore? Pharma cannot make money with it, that’s why they don’t want it. Wasn’t Pfizer working on an Ivermectin clone [no!] to sell us for high prices so they turn a profit? Also, they hate Ivermectin because it would cut into their vaccine profits. Isn’t Ivermectin a safe alternative to the supposedly dangerous vaccines? Why are “they” preventing doctors from prescribing it and telling the truth?… yadda yadda yadda you know the spiel:

I think it comes down to a fear of the disease, as well as distrust of the medical profession, with magical thinking sprinkled on top. — David Gorski, Science-Based Medicine

Stories bind people together; they create shared cultures. Believing in certain miracle cures may become a type of “social glue” that connects people who hold similar beliefs to one another. That is the power of story. Evidence be damned.

Even I have acquaintances who took Ivermectin when they got Covid. While they both were vaccinated and young, they believed that actually, the Ivermectin they took was the reason why they only had mild disease. (I had mild disease without any Ivermectin or other supplements, just vaccination btw)

Personal experiences or exceptional anecdotes of surprising recovery or unexpected death always make for good stories.

This brings us to the next point:

3) The home-sleuthing Amateurs

Our connected information age has made sleuthing on the internet both easy and ubiquitous. No matter what popular topic, there is always a set of sleuths willing to invest inordinate amounts of time to “do their own research” and uncover nuggets of information that they perceive as new, odd, surprising, counterintuitive, or confusing.

Within this sleuthing, “Anomaly hunting” is a social practice that seeks to find unexplained phenomena or coincidences that seem at odds with official narratives on a topic. Anomaly hunting has been traditionally associated with paranormal beliefs (Prieke et al., Thinking & Reasoning, 2020), yet some behavioral psychologists have argued that it is also a manifestation of how conspiracy theorists structure their worldviews. (Wood & Douglas, Frontiers in Psychology, 2013)

They imagine that if they can find (broadly defined) anomalies in that data that would point to another phenomenon at work. They then commit a pair of logical fallacies. First, they confuse unexplained with unexplainable. This leads them to prematurely declare something a true anomaly, without first exhaustively trying to explain it with conventional means. Second they use the argument from ignorance, saying that because we cannot explain an anomaly that means their specific pet theory must be true. I don’t know what that fuzzy object in the sky is — therefore it is an alien spacecraft (Novella, 2009).

Conspiracism is rooted in several higher-order beliefs such as an abiding mistrust of authority, the conviction that nothing is quite as it seems, and the belief that most of what we are told is a lie. Conspiracy theorists seek and prize anomalies that seem to conflict with the official version of events (Keeley B., The Journal of Philosophy, 1999).

Conspiracy theorists reject standard sources of information about the events which interest them, and have exaggerated trust both in their own perceptiveness, and in anomalous pieces of evidence — (Hawley K, Philos Stud, 2019)

Pair this overconfidence in their own abilities with a tendency to display hypersensitive agency detection (Douglas et al., 2016) and increased illusory pattern perception (van Prooijen et al., 2018), and it becomes quite clear why “anomaly hunting” has become a widespread social activity in conspiratorial circles.

On top of that, conspiratorial thinkers tend to show poor analytical thinking skills (Swami et al., 2014) and have a tendency to jump to conclusions (Pytlik, 2020).

What is the impact of anomaly hunting on the discourse?

Anomalies, fake or real, are powerful tools for manipulators because they play into conspiratorial tendencies.

Unexplained anomalies and coincidences can be used to create doubt about the ‘official’ story, or build fallacious arguments from incredulity to delegitimize mainstream science.

Because humans have a tendency to seek patterns in random signals, manipulators and BS artists can abuse select anomalies to create an illusion of meaningfulness in their audience and bolster their preferred narrative (van Prooijen et al., 2018, Walker et al., 2023), often through committing a post hoc ergo propter hoc fallacy. This fallacy is not new by any means, as the Latin name suggests. It’s human to make these causal connections, even when not there.

In reality, most anomalies are irrelevant or in no causal relation to the topic at hand.

Another impact of anomaly hunting on discourse is that it manipulates people into cognitive biases such as the conjunction fallacy, where attaching unrelated but co-occurring anomalies to a narrative makes people believe it is more likely (Tversky, A., & Kahneman, D., Psychological Review, 1983), or into confirmation bias.
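The conjunction fallacy mentioned above violates a basic rule of probability, which can be stated compactly. As a minimal sketch, let $N$ stand for the core narrative being true and $A_1, \dots, A_k$ for the extra claims attached to it (these symbols are illustrative assumptions, not notation from the cited paper):

$$P(N \wedge A_1 \wedge \dots \wedge A_k) \le P(N)$$

Every added detail that must also hold can only lower, or at best leave unchanged, the probability of the whole story. With purely hypothetical numbers: if $P(N) = 0.10$ and one attached claim holds with probability $0.5$ given the narrative, the combined story has probability $0.10 \times 0.5 = 0.05$, even though the richer version may feel more convincing.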

If this sounds complicated, just pay attention to how anti-vaccination activists use perceived anomalous deaths of athletes or kids to sow doubt about vaccine safety and efficacy.

Example: Perceived anomalous deaths

Anomalies often serve as potent attention-grabbing anecdotes that can be built into larger emotionally engaging stories. They are also a staple for “just-asking” questioners and contrarians alike to bring up and distort public discourse.

It is worth paying attention to how so many contrarians end up in the media in the first place:

4) The contrarian “Experts”

Throughout the pandemic, fringe scientists and doctors have dominated public conversations, using their credentials to bolster outright falsehoods — and this has left us more divided, and less informed. — Dr David Robert Grimes in Byline Times

Contrarian “Experts” are everywhere in the media.

This is because audience perception and journalistic norms assume that there are always two sides to an issue. There is a cultural notion of the journalist as a “neutral transmitter” of information, and deviation from that invites charges of bias. Furthermore, journalists themselves feel pressure to demonstrate their “innocence” (Rosen, 2014), that they take no sides, have no stake, ideology, or politics, and no views of their own (Kohl PA. et al., Public Underst Sci, 2016).

Besides journalistic norms, there is a second trend in today’s fast-paced attention economy: The use of neutrality as a mere fig leaf to cover for journalistic incompetence:

[…] Journalists might also use balancing as a “surrogate for validity checks” when they do not have the time or the expertise to determine which side of a controversy is more likely to represent truth. — (Kohl PA. et al., Public Underst Sci, 2016)

Failing to sort genuine topical expertise from media-savvy contrarians outsources the task of assessing the validity of statements to consumers of journalism, who have even less time and are usually less able to judge the veracity of presented statements. This makes gaming the media’s neutrality bias a common tactic for manipulators on complicated, jargoned, or technical scientific topics.

On top of that, contrarians “validated” by being featured on mainstream media enjoy increased visibility compared to consensus-representing experts on social media (Petersen AM et al., Nature Communications, 2019), which also has to do with attention economy incentives that favor new, sensationalist or outrageous claims over textbook knowledge. But even for serious outlets, the twisted relationship between desired media neutrality, journalistic norms, and lazy incompetence is easily exploited by credentialed contrarians to present their groundless assertions next to well-established scientific truth.

While the above BBC reporter has been tricked, in general, the media’s habit of false balance reporting by equating mainstream expert and contrarian opinions has dire consequences for the public’s understanding of any issue, for example making parents believe the evidence for a link between MMR vaccines and autism was as substantial as the evidence against it (Hilton S., BMC Public Health, 2007), or creating doubt on the consensus about climate change:

Exposure to contrarian views reduced perceived expert consensus, even when paired with a consensus view, and regardless of the expertise of the contrarian source. — (Imundo & Rapp, Journal of Applied Research in Memory and Cognition, 2022)

More generally, media manipulators can use any false balance reporting as “validated content” to sow doubt about established knowledge. Taking advantage of the media’s desire to present all views equally (instead of for example based on merit or weight of evidence) can be a Trojan horse helping contrarian “experts” to effectively spread damaging falsehoods (Grimes DR., EMBO Rep., 2019).

Why “Expert” in quotes?

Well, most contrarian “experts” overwhelmingly commentate outside their narrow field of expertise, usually on topics of public interest or relevance, while failing to back up their assertions with science. Contrarians often seek to circumvent traditional processes of scientific inquiry, publishing, and peer review, instead disseminating their ideas directly to journalists or the public.

On social media, they tend to inflate their actual credentials and distract with technical vocabulary, convenient anecdotes, rhetorical tricks, and half-truths to create the appearance of an authoritative source to average consumers of information. They act as manipulative commentators, not scientists or science communicators.

Science is a systematic method of inquiry, that makes testable predictions and updates itself based on the totality of evidence. Scientists by contrast are people, subject to human foibles and biases. Any perceived authority they may have stems not from their qualification, but from accurately reflecting the totality of evidence. If they fail to do so, their credentials, education, and prestige are irrelevant — they are not practicing science. — Dr. David Robert Grimes in Byline Times

The “expert” is in quotes because while genuine medical/scientific experts exist that hold contrarian views based on legitimate domain expertise, 99% of the time what gets featured in the media is just a credentialed commentator for the superficial appearance of balance (and more cynically: for getting profitable headlines).

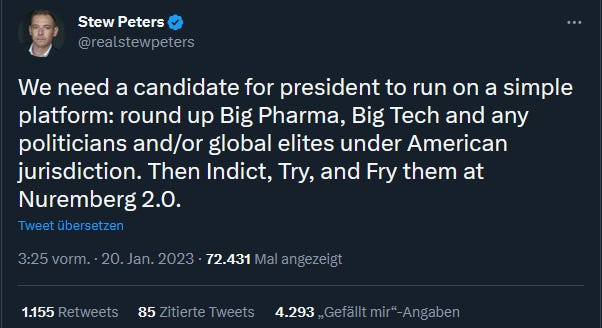

More could of course be said about the role of media and how their economic incentives for creating conflict (for engagement) play into contrarians’ hands as well. Or how the media class seems addicted to Twitter, a platform built around conflict, sensationalist rumors, and contrarian takes (and that was before a controversial billionaire with autocratic tendencies bought it and removed any type of moderation of medical or scientific misinformation).

Media coverage of scientific topics is just obsessed with highlighting the individual scientists, not the underlying science, and that makes us prone to fall for misinformation coming from great storytellers as well as moral grandstanders.

5) The grandstanding Moralists

If moral outrage is a fire, is the internet like gasoline?

Moral outrage is a powerful emotion with important consequences for society.

People experience moral outrage when they perceive that a moral norm has been violated, and they express outrage when they believe that doing so will prevent future violations. This expression of outrage is both amplified and trained by social media (Brady et al., Science, 2021).

[Social media] users conform to the expressive norms of their social network, expressing more outrage when they are embedded in ideologically extreme networks where outrage expressions are more widespread, even accounting for their personal ideology — Brady et al., Science, 2021

Performative moral outrage expression is particularly noticeable in a practice called moral grandstanding, the use of public moral discourse for self-promotion (related: virtue signaling).

Moral grandstanding is associated with status-seeking and conflict, not truth-finding (Grubbs et al., PLOS ONE, 2019). This does not mean grandstanding is necessarily fake. The most dangerous consequences of grandstanding arise because grandstanders sincerely believe the things they say, thereby aiming to reduce free expression within the desired in-group, punishing dissent, and making deviation from shared beliefs socially costly (Tosi & Warmke, Cambridge University Press, 2021).

Grandstanders want others to be impressed with their moral qualities — that is, the purity of their moral beliefs, their level of commitment to justice, their skill at discovering moral insights, and so on. — Tosi & Warmke, Cambridge University Press, 2021

Sometimes, moralizing discourse and grandstanding can devolve into societal overreactions and moral panics.

Moral panics are emotionally driven reactions to societal crises in which risks are communicated using moralising discourse, generated in and through media representations of risk and harm. — (Skog & Lundstrom, Social Science & Medicine, 2022)

Especially during pandemics, moral panics tend to flourish (Gilman, The Lancet, 2010) and may sometimes constitute legitimate responses to ‘real’ threats such as pandemics or climate change (Rohloff, Sociology, 2011). Moral panics are characterized by highlighting incurable flaws in certain groups and by interventions in which a majority disciplines the minority into obedience (Skog & Lundstrom, Social Science & Medicine, 2022), thereby often going hand in hand with activism for social or structural change.

Because moral grandstanding is polarizing, tribal, and ubiquitous on social networks, media manipulators can abuse our susceptibility to moral outrage and moral panics to shape or sabotage public discourse.

For example, moral panics have been used by manipulators to create or deepen both vertical (e.g. ‘the people’ vs. the ‘establishment/big pharma/experts’) and horizontal divisions (e.g. ‘virtuous individuals’ vs. drug addicts, racial minorities) in medicine (Lasco & Curato, Social Science & Medicine, 2018).

Example: Vaccinating children

Lying, fears, and moral panics about childhood vaccinations have of course been a threat to public health for decades, not just since Covid-19. In the end, moral grandstanding and moral panics are effective tools for engagement and manipulation, but they leave the public more divided, less informed, and parents unnecessarily radicalized and fearful.

Talking about radicalization…

6) The toxic Mudslingers

One manipulation tactic common in politics has also made it into public discourse about scientific issues: Mudslinging, also known as negative campaigning, including ad hominem attacks to discredit experts.

In politics, mudslinging pays off when blaming domain problems or reframing the essence of a topic on issues the adversarial party has perceived ownership of (Seeberg, West European Politics, 2020). This means that against scientists and doctors, mudslinging is particularly effective when it gets coupled with (perceived or real) issues such as profiteering by pharma companies, corruption in institutions, or errors in public health policy, irrespective of whether the individual expert is innocent of or even working against these practices and systemic issues.

From inventing conflicts of interest to the pharma-shill gambit, medical and scientific experts face a lot of baseless attacks on their character in an attempt to discredit their research, arguments or expertise.

As an online discussion of health, in particular vaccines or alternative medicine, grows longer, the probability of the invocation of the ‘pharma shill gambit’ approaches one. — David Gorski for Science-Based Medicine

While socially constructed, the impact of mudslinging can become a tragic social reality in the form of character assassination of individual scientists (see for example Dr. Fauci or Dr. Tedros) or more general stigma (Smith & Eberly, Journal of Applied Social Theory, 2021). Scapegoating and blame are very popular topics for negative campaigns against experts too because of their moral implications and salience (Rothschild et al., Journal of Personality and Social Psychology, 2012). That is the nature of mudslinging: throwing stuff at the wall to see what sticks.

There is an ancient wartime practice of pouring poison into sources of fresh water before an invading army…

I just want to highlight one particularly pernicious strategy to preemptively discredit experts before they get a word in: poisoning the well.

… a special form of strategic attack used by one party in the argumentation stage of a critical discussion to improperly shut down the capability of the other party for putting forward arguments — Walton, SSRN, 2006

A classic example:

When a woman argues:

it doesn’t make sense to pay a person more for doing the same job just because he is male or Caucasian.

There might be a few ways to respond. Yet instead of addressing the argument, well-poisoners would counter:

Because she is a woman, it’s to her personal advantage to favor equal pay for equal work. After all, she would get an immediate raise if her boss accepted her argument. Therefore, she is biased, not sincere, and her argument is not worth engaging with.

Like all fallacies, it is based on some superficial plausibility enhanced by argumentation that is not only persuasive to an audience and has a rational core, yet is exploited, misdirected, or blown out of proportion in a cleverly misleading way, making it useful as a powerful tactic of deception. — Walton, SSRN, 2006

Poisoning the well, when successful, leaves little recourse for the other party to respond.

Poisoning the well is ubiquitous online and effective at inoculating fragmented audiences against arguments from doctors or scientists. For example:

Because doctors work for the medical establishment, they cannot be trusted, especially when it comes to evaluating alternative medicines in competition with ‘establishment doctrines’

Because scientists get funding from institutions, they are forced to toe the ‘government line’ to keep the money stream open and will only do politically sanctioned science

Media manipulators regularly use mudslinging, scapegoating, dehumanization, or poisoning-the-well strategies to discredit inconvenient experts or institutions.

The real impact of these tactics, however, comes from social networks, where they create online mob dynamics so toxic and dangerous that experts disengage or get bullied, harassed, or threatened out of the public discourse.

It is well-reported that online hate has a chilling effect on any individual and can be weaponized to suppress free speech and silence dissent, a form of digital repression (Earl J. et al., Science Advances, 2022).

While all the other manipulation tactics we covered are to the detriment of science, digital repression is antithetical not only to science but also to a democratic society.

So where does this leave us? What does exposure to these manipulations do to society?

Conclusion

I believe we have to rethink information ecosystems and public spaces by moving beyond talking about medical or scientific mis/disinformation.

We have to recognize that online platforms and (social) media incentives have fragmented our perception of reality. This has created novel asymmetries and vulnerabilities that bad actors can abuse to hijack public conversations and sabotage scientific discourse, often for personal profits or political goals.

I worry there is a more dangerous meta-effect than harmful misinformation stemming from these endless information battles online:

A general breakdown of any functional signal-to-noise ratio on any scientific topic that contradicts popular beliefs or interferes with the lies of manipulators and powerful interests.

For simplicity, I will call this meta-effect “noise pollution”.

Noise pollution entails that:

content virality, not content value (truth, accuracy, context, reliability), is the primary criterion for information to spread and be seen, and while compelling fictions can be optimized for engagement, facts cannot

for most citizens online (and increasingly offline), the majority of popular information they consume comes from untrustworthy sources

citizens experience cognitive overload and confusion, which leads to either disengagement, fallacious processing, or falling back to intuitive priors (gut feelings, not evidence-based decisions)

citizens end up outsourcing opinion formation (when no expert or good info source can be identified) to proxies within their social trust network or tribe

An argument can be made that noise pollution deeply influences our lives on more than just medical or scientific issues:

If we cannot find reliable information, and if we lack the domain expertise, time, or energy to engage deeply with a topic, we either give up and fall into inaction, follow our uninformed intuitions, or rely on our trust networks to navigate modern life. The last point needs some emphasis because it is a defining feature of our current societal struggles.

Relying solely on trust networks, not expert institutions, scientific consensus, or factual reporting to inform one’s opinions is dangerous in a world of fragmented realities.

Quacks, fake experts, influencers, political commentators, and other media manipulators have a far wider reach than actual trustworthy sources, including institutions. They appeal to our intuition, are skilled at manipulating us into trusting them, or know how to emotionally shape public conversation to achieve their goals. On top of that, having to rely on trust or feelings towards information sources may predispose people to cognitive illusions, where statements that feel good are accepted irrespective of truth. Studies have found that people share conspiracy theories they themselves know to be false because of the expected social impact.

It is a mess, and this crowd-sourcing of falsehoods in society just adds up to more noise pollution, and with it, political polarization.

It leads to absurd medico-social phenomena, for example, the association of ineffective Ivermectin prescriptions with political affiliation, or watching a specific cable news network reducing COVID-19 vaccine compliance.

So even beyond the profiteering of media manipulators, noise pollution sabotages collective action on cooperative problems like climate change and pandemic prevention, or medical topics like vaccination and public health. This also makes noise pollution a powerful weapon for detractors of science and autocrats alike.

Disinformation researchers have long known that narrative control in the information age works through noise pollution, not censorship.

Many scientists, doctors, and institutions have been slow to pick up on the role of trust in their communications, believing that factual information alone can help persuade society to make reasonable inferences about reality. In the meantime, well-connected and dubiously financed merchants of doubt have invested heavily in sowing doubt about established knowledge and breaking any trust relationship science had with society.

Like Russian propagandists, their gift has been to take things apart, to peel away the layers of the onion until nothing is left but the tears of others and their own cynical laughter. (Quote adapted from Timothy Snyder, Foreign Affairs, 2022)

The truly devastating part is that historians of science have warned about these tactics for a long time, to little avail in the public consciousness.

As an educated public and as democratic citizens, we ought to fight tooth and claw against being manipulated and deprived of our own agency by the merchants of doubt and their cynical communication tactics.

As supporters of science, reasonable discourse, and evidence-based decision-making, we need to grow wiser about the current media ecosystems and the actors that shape them.

Pollution is a threat to the public good because it degrades shared natural ecosystems. Noise pollution is no different when it comes to information ecosystems.

Our own behavior is the one thing we can change immediately. There is of course also an argument to be had about more and stricter regulation and moderation on social networks, especially when it comes to amplification, behavior, and participation.

But I will leave that for another day.

Disclaimer:

This article posed a bit of a dilemma for me, as it can also be used by bad actors as a guide on how to influence or derail discussions. Whenever situations like this arise, one has to do a cost-benefit analysis of whether the good outweighs the bad. Obviously, since you have read this article, I came down on the benefit side, so I just want to share a few considerations about how I reached that judgement:

Ubiquity. These tactics are already widely used, many of them as old as time. My article highlighting why they work is unlikely to dramatically increase their use.

Harm reduction. It is my belief that these tactics mostly work to manipulate when there is a lack of awareness. Creating awareness thus should reduce harm, especially around medical discussions.

Combatting side effects. These tactics create noise pollution, and noise pollution comes with many societal diseases we have yet to wrap our heads around, like epistemic paralysis, nihilism, and conspiratorial thinking. If enough people come around to see the bigger picture, systemic changes to our info spheres become more realistic.

Restore agency. Manipulation robs us of a fundamental human right, the freedom and agency to make our own decisions. There is no democracy when citizens have no agency.

As always, my hope and goal is to educate and equip citizens with conceptual tools and new perspectives to make sense of the world we inhabit.

This article took a lot of time and effort to conceptualize, research, and produce, almost irresponsibly so given that I do not monetize my scicomm and certainly do not plan to start now.

I see this work as a public good that I send out into the void of the internet in hopes others will get inspired to act.

You are also invited to deepen this work or just derive satisfaction from understanding our chaotic modern world a bit better.

So feel free to use, share, or build on top of this work; I just ask you to attribute it properly (Creative Commons CC-BY-NC 4.0).

Here are some handy resources to get you started:

Cite this work:

Markolin P., “Denounce, deny, deceive”, March 1, 2023. Free direct access link:

Also, since this topic is close to my heart, I’d be happy to hear your thoughts. I’d be even happier to hear what you plan on doing next.

Twitter: @philippmarkolin (beware: Twitter is now a hostile & anti-democratic information platform)

Mastodon: @protagonist_future@mstdn.science

Substack:
