Shadow hunters in the biohazard lab

A rant about influencers, bad-faith arguments, and how they fuel anti-science conspiracies.

Background:

The uncertain origin of SARS-CoV-2 (SC2) has been a magnet for conspiracy theories, driven by many factors, from domestic American politics, cognitive fallacies, social media incentives and geopolitics to attention-stealing curation algorithms, to name just a few. In a set of earlier articles, I focused on two things: First, I outlined why the body of scientific evidence supports a zoonotic origin of SC2 and rules out any and all currently advanced laboratory-origin scenarios. Second, I explained how many systemic factors of our broken infosphere are directly involved in creating our current epistemic crisis: a phenomenon where large proportions of the population find themselves regularly misled by popular online influencers (or attention grifters) on a scientific issue, be it vaccine safety, climate change or, well, lab leak.

Just to get one thing out of the way: I do not blame people who might have found themselves misled. I think this would be unfair. I write this article because I am actually angry on their behalf. So much of making sense of the discussion around the origins of SC2 comes down to either having specific domain expertise or a deep, analytic familiarity with the dynamics of online conspiracism. If one happens to have neither, it depends mostly on whose voices one trusts in one’s information network, and which narratives appeal to personal intuition. And this is where the trouble starts.

Chapter 1: Amplification dynamics favor untruths

Why influencers are bad for science

Contempt for the conman, compassion for the conned.

We tend to hold ordinary people responsible when they hold silly beliefs. After all, who is in charge of their beliefs if not the holder themselves? The reality is of course more complex. Let’s do a little thought experiment:

A scam company is using false advertising to sell you a junk product, which you only realize by the time the product arrives at your doorstep. By then, the company is gone and so is your money. How much of you being fooled is really your fault? Maybe a little, because you should not trust no-name companies?

But what if this company was actually using a distributor you usually trust, like Amazon, where you usually get good products and can reasonably expect to? I think many can agree that putting the blame on the victim who got conned in this case is often neither fair nor reasonable.

Now let’s think about information not as something abstract, but as a special type of product we consume. It is in fact the main product in the attention economy (and targeted information drugs are the worst kind). If you buy junk information from an online grifter, you might be a little responsible for being so gullible. But what if the information you buy comes from your social network, where you usually get good information on all kinds of other topics? How would you even know that one particular piece of information is junk? Usually only when it comes down to some kind of test or reality check, when the product is at your door, so to speak. Well, let’s ask people who were on their deathbeds what they think today about the quality of the information they bought from anti-vaxx grifters (if they made it out alive, that is).

Information is a special kind of product because it shapes our beliefs about the world; we use it to build our identity and guide our actions, often subconsciously. Believing junk information is often harmless, but sometimes, especially during a pandemic, it can be dangerous. So much anti-scientific junk has been sold in the last two years: that the virus is not real, that Covid-19 is like the flu, that masks don’t work, that vaccines are worse than the disease… we are all so sick and tired of it.

People do not deserve to die because they got sold junk information

Yet I hope you are with me when I say: People do not deserve to die because they got sold this junk information. British broadcaster James O’Brien has a nice phrase for that: Contempt for the conman, compassion for the conned.

So today, all my fury is directed at the conmen, these junk sellers: anti-science influencers, attention grifters and actual grifters who abuse contemporaneous scientific uncertainty for self-serving motives. Those who deliberately create simplistic, lowest-common-denominator narratives that appeal to intuition or, worse, play into fears and prejudices because it is good for their bottom line.

But I’m getting ahead of myself. Let’s start with the basics:

Influencers and grifters usually excel at one particular thing: catching eyeballs and building trust with their audiences so they can then leverage that ‘special’ relationship into some kind of profit. This can be indirect, through attention (and advertising) on the platforms they use, or much more obvious, by selling subscriptions, books, or supplements.

But hey, not all influencers are that bad. In fact, most are probably decent people who provide genuine value in their relationship with their audience, so sometimes, profiting is fine. Many influencers can be trustworthy when it comes to something they have domain expertise in. For example, video game influencers can probably be trusted when they say a game is worth playing or buying, because their reputation is more important to them than shilling for a bad game.

Others might be less trustworthy, for example, those who want to sell their own products, get sponsored to market other products, or are sponsored by foreign actors to spread politically motivated disinformation. It’s a messy world out there and who to trust on what topic is often difficult to parse.

However, when it comes to complex or complicated science, relying on influencers or individuals instead of institutions to make sense of it for us quickly becomes untenable and dangerous, as the pandemic has shown. Science creates knowledge and provides the factual basis for a shared reality where humans can cooperate. The op-eds have to come after the facts are on the ground, not before. We need, and luckily have, many scientific institutions where domain experts come together and, through the scientific process of assessing all the evidence, start converging on a consensus position, with known boundaries to certainty and confidence.

The complete lack of supportive evidence does not stop many influencers from amplifying conspiracy fantasies

Influencers are almost always incapable of deriving a trustworthy consensus about a scientific matter by judging the evidence on their own, especially when most of the “hot take” influencers do not even have specific domain expertise in what they opine about. Nor do they have the patience to wait until more conclusive data emerges, as would be appropriate for scientific matters. Being first with a wrong ‘hot take’ is unfortunately way more profitable in the attention economy than being second with the correct take.

Having a take that is unique, emotive, outrageous, anxiety-inducing, or otherwise engaging sells better than deferring to experts or being constrained by the scientific consensus. That’s why influencers are often willing collaborators of dissident scientists who cannot support their theories with evidence within the scientific institutions (and therefore seek to circumvent them by going directly to the public). Their obsession with unique hot takes, coupled with their lack of expertise, also makes influencers an easy mark for pseudo-scientific grifters.

Being first with a wrong ‘hot take’ is unfortunately way more profitable in the attention economy than being second with the correct take.

If you have ever contemplated tattooing a message on your body, consider this one:

The complete lack of supportive evidence will not stop many influencers from amplifying controversial scientific theories of cranks. It is the salience of the contrarian narrative which matters to them in their quest for the most viral hot take.

(okay, maybe a bit too long for a tattoo… but still important to understand)

Obviously, anti-science influencers are often wrong in their takes on science, and eventually, their audience might catch on. So part of their craft is to formulate ‘hot takes’ that are either unfalsifiable or so open to interpretation that they can always pivot to a more defensible position. (A rhetorical structure known as the motte-and-bailey fallacy is often at the heart of this, but there are many other tricks.)

“Every option must remain on the table until it has been conclusively disproven”,

anti-science influencers often proclaim in defense of their hot takes on a scientific matter. This is when they cross over into conspiracy theory territory.

A scientific consensus is formed around supportive evidence for a hypothesis and in relation to other competing hypotheses. The burden of proof lies with those who advance a take or hypothesis and must not be shifted onto others to disprove it. Not every possible alternative hypothesis remains scientifically valid until it has been specifically disproven, especially when the “competing theories” are unscientific or unfalsifiable in the first place, as ever-expanding cover-up conspiracies tend to be. They are unfalsifiable because every absence of evidence just serves as more evidence of a cover-up, and every contradiction is resolved with ever more convoluted explanations of how it might fit into the bigger conspiratorial picture.

“The cover-up may have been so extensive that we will never know, so it will always remain a logical possibility” is a conspiracy argument as old as time, and usually false, because people often underestimate the ingenuity and power of scientific inquiry.

Furthermore, conspiracy-prone influencers endlessly regurgitate every anomaly or cherry-picked piece of scientific evidence that can be tortured to align somewhat with the advanced conspiracy, all in the service of keeping their debunked pet theories alive despite a strong contradicting scientific consensus. While cherry-picking is neither convincing to scientists nor tolerated within scientific institutions, it is often this malicious instrumentalization of decontextualized evidence that is absolutely toxic to public discourse (more on that in Chapter 2).

You get the point, we all do. Once conspiratorial thinking has taken over, it becomes a self-sealing truism of confirmation bias, echo chambers, and motivated reasoning. Sprinkle in some apocalyptic messaging about the “big revelation” coming soon, et voilà.

On top of these primary conspiracy drivers, our current attention economy will unfortunately always make sure to supply enough influencers who willingly amplify anti-science conspiracies. This is because an influencer’s “hot take” incentives align better with outrageous garbage than with ‘mainstream’ science.

The dynamics are clear, but what about the triggers? How do people fall into these conspiracies, and who bears some responsibility? I already talked about some of the systemic forces at the root of these polarizing anti-establishment narratives in a different article, but not so much about the actors, because they are less important and somewhat interchangeable. There will always be somebody willing to fill the slots the outrage algorithms want filled. The “who” is far less important than the “how”.

So today, I want to drill down on those toxic communication tactics, exemplified by two currently ongoing funnels (one might call them “information entry drugs”) towards lab leak conspiracism:

Biosafety fearmongering and the virus hunter panic.

Step in and recalibrate your outrage meter; you might need it.

Chapter 2: Biosafety fearmongering & virus hunting panic

Does the person have a good understanding of the benefits, or are they just fearmongering about dangers?

There are many misconceptions about what viral work scientists do in a lab or outside when they go ‘virus hunting’, what protocols they follow, what oversight mechanisms exist, and most of all, what risks the work poses.

So let’s start with the obvious: Risks.

Researching viruses does involve potential and actual risks to human health, because, as we’ve painfully learned, nature is brimming with viruses that can cause havoc if they ever find their way into human transmission chains.

Now here is a point that is not often mentioned:

Not researching viruses comes with potential and actual risks to human health as well. Scientists need to acquire knowledge about these dangerous pathogenic algorithms that regularly cause disease and death in wild animals, livestock, and humans. To do that, they have to perform research on these viruses that is both informative and appropriate given the non-zero risk that working with viruses poses. This is the reality we are living in and have to make our peace with.

In short, we always have to think about risk:benefit ratios. These are often complicated to assess in full for each experiment or research project, and they depend on countless external factors, past, present and future, but there is no question that, in general, virological research plays an important role in society. So whenever people try to sell you the idea that something is just ‘too risky’ to ever be done, I want you to ask yourself: Does this person have a good understanding of the benefits, or are they just fearmongering about the dangers?

Consider, for example, that Covid-19 vaccines seem way ‘too risky’ to almost a third of the US population, because they can have unwelcome side effects, in rare cases serious health complications, and in the rarest of cases even trigger a chain of biological events leading to death (e.g., blood clots). In a vacuum, it just seems too risky to subject people to a Covid-19 vaccination, right?

Well, of course not, because we don’t live in a vacuum but in a pandemic with a deadly pathogen that kills on average around 1% of the people it infects, causes serious long-term damage in about 30%, and makes most of the rest pretty sick, often for weeks. That’s the reality in which the risk/benefit of vaccines makes a lot of sense.

Anyway, back to virological research more broadly. Here, assessing risks versus benefits is much harder, but let me try to give some ideas on how to extrapolate.

Pandemic frequency

Often, the risk/benefit question gets framed in a strictly pandemic setting: virological research increases the risk of pandemics but has so far failed to prevent one, so we should stop. This is too simplistic, because pandemics are about frequency and severity (how often they occur and how much harm they cause). Again, we find ourselves not living in a vacuum but in a world where pandemics are a regular occurrence, whether virological research happens or not. If virological research can either A) reduce the frequency of naturally occurring pandemics or B) reduce their severity, then the risk that the work itself might also cause a pandemic might still be worth taking. I said “might”, because this is where smart people (usually domain experts, not internet trolls) have to come together and chart a path forward that aims to maximize the benefits of said experiments while reducing risks as much as possible.

One cannot aim to find something new and useful without exploring many avenues, including some ‘riskier’ ones

Knowledge saves lives

Pandemics are, however, not the only consideration when talking about viral research. Having knowledge about the viral enemy is usually better than being blind to it, even if it is not always clear a priori which knowledge might be helpful in the war. As we have already seen, researching viruses can save lives in many ways, from aiding the development of drugs or vaccines to enabling new classes of therapeutics (viruses are the main delivery vectors for the current gene and cell therapy revolution in biotech). Technically, the latter are all “Gain of Function” research; after all, we are equipping viruses to better target human cells.

Of course, researchers do this with genetic cargo that is beneficial to us and with viral backbones already so watered down that they no longer have pandemic potential. Current delivery methods are also heavily adenovirus-based, and these pose almost no epidemic risk (although sporadic adenovirus epidemics have been reported before).

We can also imagine a more basic scenario: just think about finding a virus in the wild whose mechanisms or tropism can be repurposed to target specific human cell types, including cancer cells. The therapeutic benefits would be enormous and would certainly count towards the “saving lives” side of the risk equation. Speculative, of course. (Oh wait, we’ve been doing that for a while… here is an example mimicking the deadly rabies virus capsid to target brain tumors… I wonder if we would ever have found this one without virologists studying the deadly rabies virus? *panic*) Again, knowledge is useful; it is about minimizing risks while maximizing benefits.

What about economics?

In the lead-up to SC2, another pandemic hit China hard, though not its people but its pigs. The African swine fever virus eliminated 40–60% of all pig livestock, causing massive economic damage, sending meat prices soaring and creating a massive drive to find alternative sources of meat, including wildlife. How that demand for wildlife might have helped create circumstances favoring zoonotic jumps, including the emergence of SC2, is still up in the air. SC2 is of course a problem not only for humans but for animals too, leading to the mass culling of minks, for example. More traditionally, it is the poultry industry that gets regularly disrupted by newly emerging variants of avian influenza, causing the mass slaughter of 250 million poultry in the last 15 years alone, again with dramatic costs to economies and food security. Investigating viruses, especially those pesky ones with pandemic potential, is necessary to understand how to mitigate damage to our livestock in the future. Again, pandemics will happen and cause this terrible damage no matter how hard we shut down what virologists do, but maybe allowing them to research these viruses will lead to better solutions in the future? Understanding which viruses are out there might also be relevant for protecting certain natural ecosystems (at least from human exploitation), but then this discussion becomes philosophical.

I guess the point I really want to make is: research is not always linear, nor are research benefits always obvious in the short term.

All I can offer as a heuristic: One cannot aim to find something new, unexpected or useful in research without exploring many avenues, including some ‘riskier’ ones. To a certain extent, this is the bane of all knowledge creation: knowledge empowers us, but power comes with the need to navigate risk:benefit trade-offs.

Does that mean we have to kiss our loved ones goodbye anytime the ‘virologists’ are at it again?

Risk:benefit in virology is a complex equation balancing many factors, past, present and future. Research that was riskier in the past might be performed today with a clearly favorable risk:benefit ratio. Conversely, research that people thought “mostly harmless” can turn out to carry underappreciated risks, shifting the risk/benefit ratio. Again, this is true for all technology. Nobody at the big tech companies thought that optimizing some ranking algorithm would cause potentially permanent damage to the social fabric, let alone contribute to fueling atrocities like genocide or harm ordinary teenage girls at mass scale. The threat of pandemics is just something very direct and visceral at the moment, so, understandably, any virological research can feel like it might not be worth the risk.

On top of that, making perfect, eternally valid estimations of risk:benefit is impossible; there are just too many moving pieces. That’s why we need dedicated scientific institutions and domain experts to come together regularly and figure out the rules of conduct as we go along and learn more, not social media grifters, cranks or influencers proclaiming their “hot takes” as self-evident truths to the public.

Okay, enough of the explaining already, this was supposed to be a rant.

I think you know anyway where I was going with this: directly down the online toilet.

We’ve finally entered the realm of decontextualized attention grifters, a space where the mixture of legitimate public concerns, ever-moving scientific discussions, and real uncertainty provides a rich field for gaslighting and scaring audiences into submission. Please consume with caution:

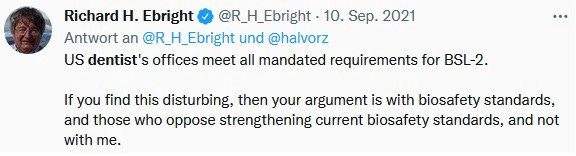

So let me give you a quick reference frame for biosafety levels: consider BSL-1 ‘safe’, BSL-2 ‘very safe’, and BSL-3/4 ‘very, very safe’. These laboratories are controlled environments where scientists sit with protective gear and equipment at their air-controlled biosafety cabinets, usually pipetting a few drops of liquid from one plastic dish into the next. Not exactly Mad Max. Even if the liquids contain deadly viral particles that would be airborne if coughed out by a host, they pose no real danger of infecting the human operator unnoticed. It would take a catastrophic accident, like stabbing oneself and injecting the virus particles with a syringe, to infect a human, and then, well, the researcher would notice and probably crap his pants. But even then we are still not at a lab leak, because for a containment breach, the researcher would have to deliberately walk out of the lab without telling anybody, without going to the doctor and without being noticed, rather than self-isolate and tell everybody, because you crapped your pants when the needle went in. And even after a containment breach, there are still multiple steps required to cause an outbreak, for example, moving into crowded places and being infectious enough to get enough human-to-human (H2H) transmission chains started before the outbreak runs itself out… crap, I’m explaining again.

Fact is, most pandemic pathogens would still die out at this point, and even SC2 might easily have died out if it had leaked from a lab and infected just a single human, but we were unlucky: it came from a highly infectious animal population at the market that caused multiple spillovers (at least 2, up to 15) over weeks, until enough humans were infected for the outbreak to become a self-sustaining pandemic. (I can’t help myself, please bear with me.)

Accidents can never be completely avoided, in BSL-2 as well as BSL-4, but that does not make them likely or the work inherently unsafe. The BSL-2 work environment is very safe for many allegedly “risky” experiments; it is just not completely risk-free, and neither is BSL-4.

Plane accidents happen too, but that doesn’t make flying inherently unsafe, just not risk-free (still safer than getting in a car by a long shot). But the human fear of dying is just something primal; we can’t help ourselves. I mean, the probability of eventually dying is 100% for humans, and how we lose our lives is often beyond our control. Does that mean we have to kiss our loved ones goodbye anytime the ‘virologists’ are at it again? Of course not, because, as the smart cookies among you have learned by now, keeping benefits > risks means that virology saves lives on average. (If you want to go full dark mirror and believe in multiple universes: the overwhelming odds are that you were born into one where virology saved you from dying in a pandemic rather than caused a pandemic that kills you.)

None of this is to say that we should just make our peace with the current status quo on biosafety, of course not. Scientists have to constantly monitor, assess and minimize risks while still getting beneficial work done. They need institutional regulations, oversight, best practices, training and equipment, and, of course, feedback and support from the wider population also play an important role.

We need dedicated scientific institutions and domain experts to come together regularly and figure out the rules of conduct, not social media grifters, cranks or influencers to dictate their “hot takes” as self-evident truths.

The “controversy” around biosafety levels in labs is of course not about a real disagreement on biosafety protocols between BSL-2 vs. BSL-4 (for that, the influencers and cranks would first have to study what they entail in detail, and who does that in preparation for some sassy tweets, right?).

Their whole spiel is more about creating a feeling that something “untoward” and dangerous is happening in labs all around the world. They give you two points and want you to connect the dots they want you to connect, not the whole picture. Once that fear they sold you has settled in, you will certainly pay more attention to them and their takes, which is what truly counts in the attention economy.

Do you know what works even better than unspecific fear-mongering in the attention economy? Directly naming who is to blame.

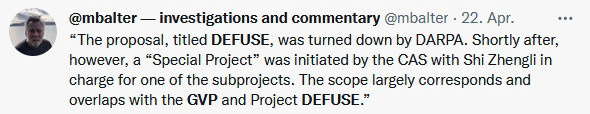

Enter the Great Reset… I mean, the Global Virome Project (GVP)

Would you rather let the viruses have a go at humans first, or would you prefer humans have a go at studying them?

What could be better to decontextualize than some ambitious multi-nation project about bat surveillance in SE Asia, partially funded by China, which seems to tangentially coincide with the timing of the outbreak in Wuhan when you squint your eyes hard enough?

Unlikely coincidences, real or perceived, are of course the driving force of any conspiracy. The sugar rush to their keto diet, so to speak. Often, these coincidences are not really that interesting or relevant, but rather the product of anomaly hunting, a special form of cherry-picking in support of one’s pet theory.

Back to the GVP. The whole idea behind it boils down to: Would you rather let the viruses have a go at humans first, or would you prefer humans have a go at studying them?

If virus hunters had found and characterized SC2 well ahead of time, maybe the pandemic would have never unfolded the way it did? We will never know because the project came too late for this pandemic to even have a shot. Knowing what types of viruses are out there, and where they can be found or likely spill over to humans can be a reasonable tool in the pandemic prevention kit, but then again, the risk/benefits have to be worked out by domain experts and institutions, not fearmongering halfwits going for clicks.

Vicious personal attacks from dissenters can make any discussion so painful that scientists are just driven away from the public sphere (also known as Heckler’s veto)

That does not mean there are no criticisms of these projects from the scientific community, but they have nothing to do with drumming up unreasonable panic about virus hunters causing pandemics and more to do with the feasibility of project aims and the distribution of resources. For example, this Nature article argues that while knowledge can certainly be gained from investigating viruses in nature, doing so with claims of “being able to predict & prevent” pandemics like a viral weather report is scientifically naive and also misleading to the public. The authors argue that the GVP endeavor is certainly not worth the exorbitant sums being pushed towards it compared to more effective, if maybe less sexy, pandemic prevention programs like population surveillance. (Proponents of the GVP say this is a false dichotomy.) The Nature article does not, however, speak negatively about the work of “virus hunting” overall and acknowledges its merits; in fact, one of the authors (E. Holmes) is probably among the most renowned virus hunters there are. They just don’t think the lofty prediction claims of the GVP are realistic… I know, the whole argument sounds a bit too dry and technical. Boring! Well, don’t worry, I have some hot takes for you:

If any of that fearmongering sounds familiar, might I remind you that we have already played through the first wave of these artificial panics, namely the fearmongering and gaslighting about ‘Gain of Function’ research that led to some awkward political cringe fest. (Seriously, no time to rehash that crap.)

As I lamented before, we put scientists in a difficult position when we want them to debunk scientific conspiracies, only to then leave them alone to fight an army of trolls who are not interested in truth but signal boosts. Vicious personal attacks from dissenters can make any discussion so painful that scientists are just driven away from the public sphere (also known as Heckler’s veto).

Engagement with bad-faith actors of any kind is of course difficult, especially for scientists who just want to educate or inform and are not familiar with these social media gamified attention-grifter tactics (one might abbreviate them as SM-GAG tactics, but maybe the visual image is not age-appropriate here, though it is certainly indicative of the type of relationship scientists find themselves in on grifter territory).

No matter how the ‘argument’ goes, scientists are left with a lot of frustration, whereas anti-science influencers got the signal boost they wanted.

Here are the varieties:

‘The scientists ignored or blocked me when I raised important questions about biosafety? Why aren’t they answering legitimate questions of public import? Because they have no good answers! They know they are wrong!’

‘The scientists do not agree with the issues I raised about biosafety, virus hunting or GoF, so they must be biased/stupid or have a huge conflict of interest. Can people so obviously wrong really be trusted about anything?’

‘The scientists agree with me that some of the above narrow issues are indeed risky, so I have a lot of credibility now! (That I’ll leverage by quote-tweeting to everyone when I spout more evidence-free garbage next chance I get)’

Agree, disagree or ignore can and will be used against you. Can’t ever do right in conspiracy land, can we?

Well, there is a method to the madness:

Chapter 3: Channel the created unease to sow doubt about science and the scientific process

All this panic stirring around lab biosafety and virus hunting has of course only one real goal: To breathe some attention back into the scientifically dead hypothesis that SC2 was created in or escaped from a lab. Well, I saved the ‘best’ for last, of course.

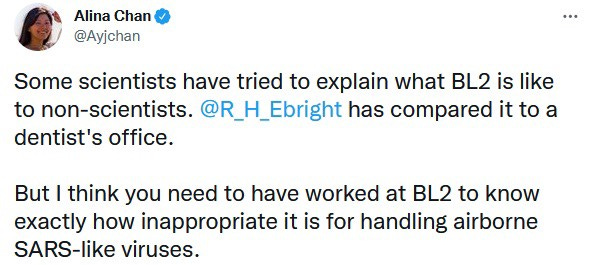

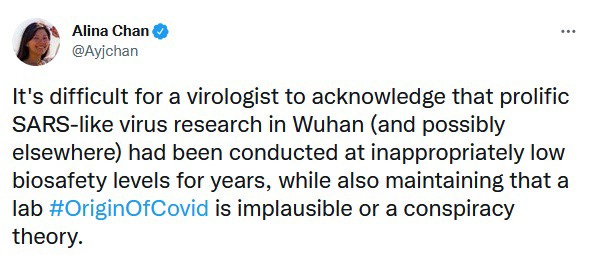

Alina Chan alleges, of course without evidence, that biosafety measures for the research at the Wuhan Institute of Virology (WIV) must have been insufficient (they were not, as best we can tell), but since she (and other prominent lab leakers) seeded that particular doubt and fear, how does the public now feel about their pet theory that SC2 escaped from a lab?!

These types of fearmongering techniques work because they leave a visceral impression that maybe we should not feel all too confident about labs, and if we ought not to feel confident about labs, can we really EVER be confident in saying that a lab leak did not cause the pandemic? Psychologically, it’s a pretty neat emotional manipulation, but please engage your analytical mind again: biosafety overall and the question of the origin of SC2 are not really related, and certainly not dependent on each other.

In fact, labs could leak pathogens all the time, and the scientific consensus on the origin of SC2 still would not move; it just doesn’t factor in that way given the whole body of evidence. The WIV could have a 100% leakage rate for all its viruses, and it would still not make the lab leak theory suddenly square with the evidence. As I’ve argued before, we do both scientific discussions a disservice by linking them together.

Alright, but did you really catch all the nuance in the tweet above? Let’s look again, there is a lot to unpack.

Alina Chan not only asserts that lab leak is still a viable theory; she also insinuates that virologists are doing something very wrong and untoward: they allegedly lie about biosafety risks so they can keep deliberately ignoring and discarding the possibility of a lab leak. This is a very crafty framing.

First, it feeds, of course, into the false trope that has been one of the driving pillars of lab leak conspiracism: scientists don’t want to investigate the lab leak theory. Which is, of course, BS. It’s 2022, and over the last 2.5 years, countless scientists have investigated the origins of SC2 directly or contributed to the body of evidence by studying the properties of SC2. A scientific consensus has formed as multiple lines of evidence emerged supporting a natural-origin hypothesis and disproving any conceivable laboratory leak scenario. But yeah, let’s go with ‘ignore’.

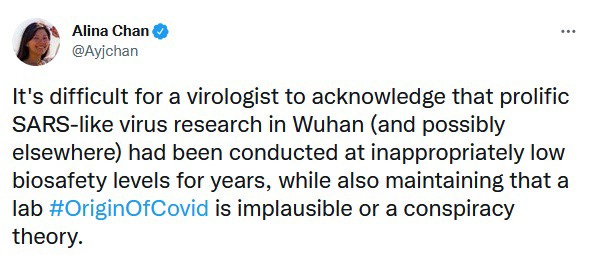

Second, it offers up a clear target to blame for people already made nervous by her biosafety fearmongering: ‘Look at those reckless virologists working in inappropriate conditions and causing the pandemic!’ This is, of course, a common theme for her, sometimes more explicit, sometimes less.

Third, by insinuating that scientists are dishonest or liars, she builds toward a ‘sinister motive’. ‘Why would scientists lie about something or deliberately look away? Are they involved? Do they have something to hide? Can’t really trust them, can we?’ Damn, she is pretty good at these subtle manipulations, isn’t she? I’d certainly take her expertise on viral influencing seriously. On virology? Not so much.

Conspiracy theorists come in many shapes and forms, and some are more dangerous than others.

I am, of course, left to wonder: what motivates a trained scientist like Alina Chan to falsely claim that there is no scientific consensus and to make up lies about virologists as a collective being reckless and immoral? Is she a true believer in her fabrications, or is she cynically riding the wave of social media conspiracism to fame?

One can only speculate. My intuition is that, at some level, she is smart enough to understand that the ever-expanding body of scientific evidence is no longer compatible with any lab leak theory. She got a lot of attention as a dissenting voice during a time of scientific uncertainty (when lab leak scenarios for the pandemic were still possible) and is now motivated to stay relevant. Unfortunately for her, the lab leak discussion is closing, and beyond that, she has nothing to contribute on the origins of SC2. Her best play is to keep the uncertainty high (at least in the public sphere) so she can maintain her perceived relevance and/or pivot to adjacent topics (i.e., biosafety) where she can keep scamming her audience. She does not seem to particularly care that her actions are poisoning public dialogue, given her heavy use of deliberate obfuscation, misrepresentation of science, and mudslinging toward virologists. It is not uncommon for conspiracy-prone scientists to circumvent scientific forums entirely when they cannot show any evidence for their theories. Despite this, she clearly likes to see herself in the role of a hero-martyr (sorry, “extra heroic”).

This delusional self-image is, of course, not dissimilar to that of other scientific dissenters who get swept up in anti-science conspiracy fantasies; take, for example, climate change deniers:

Well, as I said, these are my speculations, and there are certainly multiple layers to peel back if one wanted to. For example, Alina’s need for attention and validation might also have something to do with the fact that she felt professionally scorned when her work was judged to lack merit or was not taken seriously as science.

Ah, on top of that, before I forget: she also has a lab leak conspiracy book to sell, co-written with fellow lab leaker, AIDS-origin conspiracist, and climate change dissenter Matt Ridley.

So maybe her behavior is also driven by some financial incentives; who can say for sure?

Needless to say, after my introduction of him, I am also very much not a fan of her co-author Matt Ridley. I mean, seriously, how many times does this person have to be proven a liar over the course of his career for the public to notice? (Coal baron Matt Ridley has such an abysmal track record of being on the wrong side of science to sell profitable stories that it would blow up this article to address even a fraction of it, and seriously, who cares what these old conspiratorial geezers say anymore? The interested reader might enjoy this rant by somebody else.)

Before we continue, there is one thing I want to make clear:

I have been torn about directly naming prominent lab leakers, because singling out individuals (even horrible ones) for their conduct might boost their profile so they get to spread misinformation to even more people, or worse, risks pile-on harassment and the other unhealthy dynamics that come with the attention economy.

It certainly is a conundrum that I do not take lightly, and I want to state explicitly that I do not condone any harassment directed at the people I have singled out in this article. I’d much prefer that readers decide to mute these influencers, go on with their day, and tell their friends to do the same.

So I would also like to explain why I decided to do so now with Alina Chan: it has less to do with her beliefs and everything to do with her behavior and actions. In my opinion(!!!), Alina Chan plays a critical role in fueling lab leak conspiracism and causing real harm. She lies effortlessly and is soft-spoken; she manages to dress toxic arguments in a veneer of scientific language and rarely backs herself into a corner that exposes her actions, as too many of her conspiratorial colleagues do. This makes her more credible to the public (at least on the surface), and her audience’s trust is the online currency with which real harm gets done in the attention economy. And she is doing harm, even by her own admission, in a rare bout of self-reflection:

In my opinion(!!!), Alina has been influential because she plays a very subtle game: always using (and abusing) scientific uncertainty and ‘just asking questions’ tactics to create room for doubt in public perception, which is then filled by others [less smart and more conspiracy-prone] with fantasies that she also endorses and amplifies.

I believe the fake scientific veneer and behavior she displays when talking about lab leak is qualitatively more dangerous than randos shouting their support on the internet, because it is not only corrosive to public perception of scientific institutions and processes; it can also undermine them directly.

Being threatened by a social media troll army every time an influencer puts a spotlight on you or your research intimidates scientists and hampers the scientific process.

In the thread above, through the actions she proposes, she tries either to influence peer review or to discredit institutions if they do not adhere to her specific (and baseless) criticisms. She also all but insinuates ‘scientific misconduct’ by the study’s authors, but by now we know all about the subtleties of her messaging, right?

This is not new for her. Just in December, she called on the scientific journal Nature to add an addendum to a paper because, she alleged, it came about through a conspiracy (which is, first, not true and, second, irrelevant, because science is about the veracity of the data in the paper).

Her behavior not only interferes with standard scientific conduct; it might also actively intimidate scientists or scientific editors and stifle scientific research. Ah wait, I forgot science journalists. They are on her list too:

Being threatened by a troll army every time an influencer puts a spotlight on you or your work is one thing; another is that scientific uncertainty belongs to science and is not there to be abused by online grifters.

When uncertainty is weaponized beyond its bounds, fear of misrepresentation might deter scientists from publishing workable conclusions on controversial topics or lead them to self-censor their work. After all, there will always be some nitpick to be found that might expose scientists to political attacks by motivated actors.


This last point seems abstract, but its effect becomes palpable when you start reading papers that sound like they were written by lawyers minimizing exposure rather than by scientists who want to create knowledge. Andersen et al., ‘The proximal origin of SARS-CoV-2’, is a prime example of how scientifically written papers can become a ‘liability’ for political actors to abuse.

In it, the authors state that they found no evidence of any artificial tampering in the SC2 genome and that they therefore think a lab leak is not a very plausible origin. The article came out in early 2020 to give people a quick scientific take on the origins of SC2 and has since held up remarkably well. But because Proximal Origin was written not by lawyers but by scientists, its conclusions were viciously attacked.

For example, the paper did not go out of its way to spell out for the public that the evidence against engineering and intentional design is much stronger than the evidence against other conceivable ‘accident’ scenarios that might involve the collection or sampling of a natural virus, because, remember, there was an “engineered bioweapon” conspiracy going around the MAGAverse at the time?

Another example: the paper did not anticipate misinterpretations of its language, such as what the authors meant by “plausible” or “parsimonious”, so motivated actors seized on these words and twisted them however they needed to falsely claim the paper “killed” scientific discussion of lab leak.

Silly scientists talking only about evidence in their scientific papers, instead of pre-bunking all conceivable eventualities conspiracy theorists might come up with, right? I guess they deserved what was coming!!!!

It is my belief that science ought to be done based on evidence and reason, not as a debunking and pre-bunking exercise to keep parts of it from getting cherry-picked by motivated actors for their evidence-free conspiracies. Science also deserves freedom from political and social pressures in its search for what is true rather than what is convenient.

But maybe I’m just too old-fashioned in that way.

Conclusions

There is a saying that all roads lead to Rome, but in today’s world it would be more apt to say that all social media ‘information highways’ lead to conspiracy. The more outrageous and attention-grabbing a take, the more likely it is to be shared and engaged with.

Social media attention-grifters have finally realized that there is no punishment for being catastrophically wrong on social media, and no reward for correcting the record or changing one’s mind. [Why do you think there is so much talk about “grooming” now?] Doubling down on a pet conspiracy retains one’s audience; leaving the conspiracy behind is seen and treated as a betrayal of that audience.

Is it really a surprise that the most shameless have advanced the furthest in this ecosystem?

Influencers profit from keeping a scientifically dead but emotionally engaging zombie hypothesis alive, and this will continue to cause problems for science and society.

/end rant

While rants tend to do well on social media, I have few illusions that my words will wake anybody up. That’s okay; maybe sharing my frustrations was somehow useful to you.

References:

People, this was a rant…

What sources did you expect?

“Chan et al., Journal of social media grifting, 2022?”

Seriously? Well okay, I’ll do some disclaimers:

Rants like this are opinion, and while I made sure, to the best of my knowledge, not to misrepresent anyone’s views, you will have to trust my judgement that I was not cherry-picking tweets to dunk on, and that these are representative of a larger sample and of my longer experience interacting with these actors.

Personal judgements are not always reliable, so feel free to seek out other opinions.

However, on the larger question of toxic communication tactics, there actually is some good scientific literature; you might want to check out Lewandowsky & Cook, for example. Climate scientists and sociologists have been contending with these games for decades, and now I feel awful for them.

Don’t hate the players, hate the game. While these grifters bear some individual responsibility, they are also products of the algorithmic environment that warps all our minds. Spend your energy on changing the system, not on harassing people who are awful within it. There is a mute button, you know?

Thank you for raging with me, see you next time with some de-personalized #scicomm again!
