The AI Race to Reboot Feudalism

Let's be honest about why they gamble everything

Note: This article is a first essay out of my larger research focus on building participatory democracy in the digital age: how to counteract the global authoritarian movement. Given recent AI news and a sense of urgency, I wanted to share one idea that maybe is worth a discussion today.

Unease about the AI investment boom is everywhere

The Atlantic’s excellent article by Charlie Warzel and Matteo Wong, “Here’s How the AI Crash Happens”, prompted me to write down something that has been on my mind for quite some time. I will get to that in a bit, but let’s recap:

Quoting from the article:

“The AI boom is visible from orbit.” Fields in Indiana now host vast data centers that “demand more power than two Atlantas.” The U.S., they argue, is becoming an “Nvidia-state,” where one chipmaker has become a “precariously placed, load-bearing piece of the global economy.”

Despite the hype, profits lag: “Nearly 80 percent of companies using AI discovered the technology had no significant impact on their bottom line.” Analysts whisper “bubble.” OpenAI lost $5 billion last year; Microsoft’s AI losses topped $3 billion.

The article likens today’s frenzy to “the canals, railroads, and fiber-optic cables laid during past booms.” Whether AI transforms the world or triggers a crash, the authors warn, “either outcome will bring real, painful disruption for the rest of us.”

It paints a vivid picture of America racing headlong into an AI-driven infrastructure boom that could reshape—or destabilize—the global economy.

In a matter of years, AI infrastructure has come to dominate American growth. Vast energy-hungry data centers rise from farmland; trillions flow into chips and computing power. I think the authors point out, correctly, that the economic return remains uncertain. I recently argued a similar point about AI and productivity loss and why that might be. So a rapid economic windfall that would justify the investments seems very unlikely. Like many others, the authors point to the dot-com and other infrastructure bubbles; history suggests such frenzies rarely end smoothly. Whether the AI revolution fulfills its promise or collapses under its own weight, they conclude that the shock will be profound, and the United States may already be too invested to turn back.

For me, there is no real question whether this is a bubble; of course it is. But everybody seems to think they can ride it long enough to avoid being harmed when it inevitably bursts.

As The Atlantic article pointed out, the bigger the bubble gets, the more people will be harmed by the ensuing fallout. Yet the companies’ hunger for ever more convoluted, reckless and possibly illegitimate ways to pour money into their ecosystem, just to stretch the AI bubble a bit longer, is palpable.

“And if the dynamics also sound familiar, it’s because not two decades ago, the Great Recession was precipitated by banks packaging risky mortgages into tranches of securities that were falsely marketed as high-quality. By 2008, the house of cards had collapsed.”

- The Atlantic

Which is why, when the WSJ reported just a few days ago that OpenAI’s CFO had called for a federal backstop, more than a few investors raised their eyebrows. The comment was followed by a quick supposed walkback from the CFO on LinkedIn, though not really, since she just rephrased the point more politely as the government having to “play its part”. Privatize the wins, socialize the losses, I guess.

All while the systemic risk of a major recession increases by the day.

So why are we allowing them to take the gamble?

Know the game before you gamble

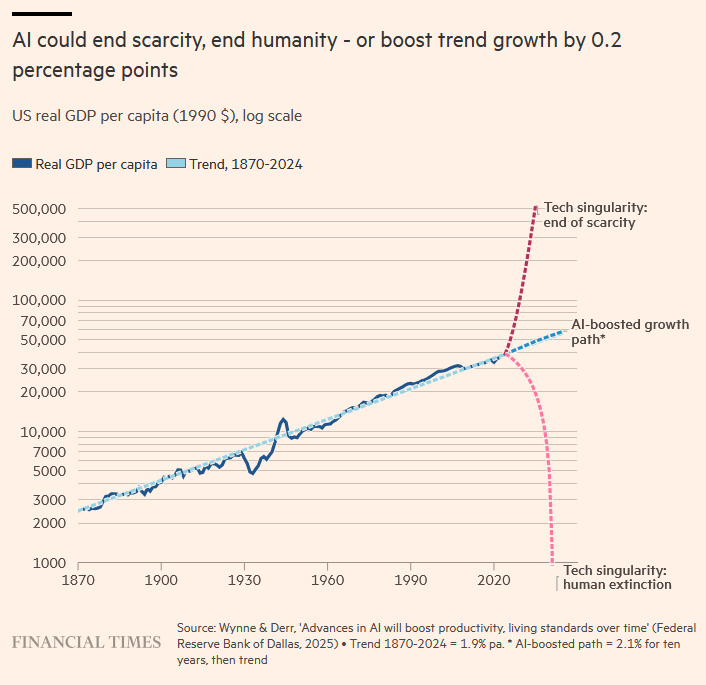

Several stories are told about why the current AI race, despite being a bubble, needs to happen as fast and recklessly as it does, no matter the cost, harms or methods deployed. That China will otherwise win the AI race is one such current crowd-pleaser, especially coming from the CEO of Nvidia, who coincidentally would gain the most by stoking such US anxieties. Another is that AI will soon unlock untold productivity and lead to real value creation in every sector of the economy. Well, I am more of the persuasion that AI is a normal technology: useful and transformative in the long run, but not magic. It seemingly follows a normal S-curve of innovation, and people over-extrapolate from the current slope while ignoring that we are already seeing some signs of a plateau. But whatever your belief in economic gains in the mid to long term, I would certainly like to retire the bedtime story that today’s AI boom is a moonshot for machine sentience, and not only because these graphs look immensely silly:

The current AI paradigms will inspire innovators to find applications that actually add value to various industries, but they cannot usher in AGI, the singularity or extinction. And yet a combination of these stories and their penetration of the public infosphere, especially the part about imminent or future superintelligence ushering in various visions of utopia or dystopia, seems to fuel investor FOMO and capture the public imagination.

So let me challenge that frenzy with a different story, one I believe to be more grounded in history and reality, and in many ways more urgent:

Consolidation of power through infrastructure capture, rent extraction, system lock-in and autocratic information control.

It’s the robber barons, rails, rents and defaults of the next twenty years I worry about, not Skynet, the singularity or misaligned paperclip optimizers.

Infrastructure ownership: it’s the rails, not the robots

Infrastructure capture refers to the consolidation of essential systems, such as transport, energy, communications, or now AI tech stacks, under the control of a small number of private actors. Historically, this pattern has produced enduring power asymmetries. When a resource becomes indispensable for competition in the market and cannot be reasonably duplicated, its owner wields structural power. When public infrastructure gets privatized or commodified into a corporate chokepoint, those who own it do not merely extract rent; they set the terms of participation in economic and civic life.

A classic example is the Gilded Age railroad monopolies in the United States. By the late 19th century, a handful of companies controlled the rail network, enabling discriminatory pricing and political leverage while producing vast wealth inequality between a small elite and masses living in poverty.

We have seen the same more recently with the big social media platforms, which for too long were treated as consumer products when in reality they aimed to become the roads and bridges of digital life for most of us while extracting a heavy rent on our attention. That rent not only made them filthy rich but also extremely powerful and politically influential, enshrining the continued exploitation of their users’ data as their business model.

AI infrastructure magnifies these stakes, as AI tech stacks are becoming the interface to information creation, dissemination and consumption. If a few companies own the chips, data centers, cloud compute, foundational models, model weights, and distribution channels, they effectively control any future useful AI application built on top of them and, with it, the epistemic and economic arteries of the 21st century.

Public infrastructure capture is de facto governance, only without consent of the governed, oversight, or accountability.

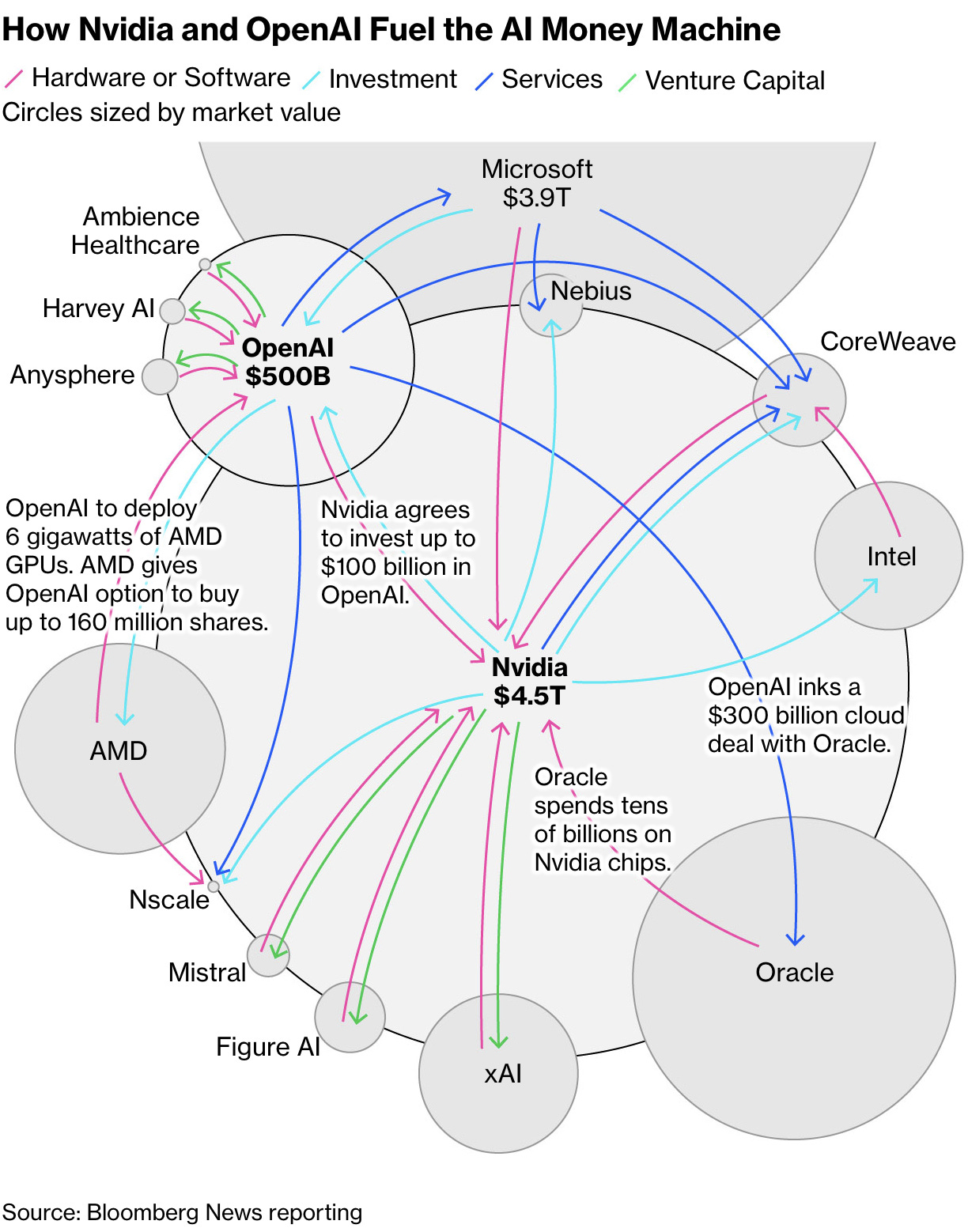

The gambles to become the next Vanderbilt, Rockefeller or Carnegie of the information age are long underway. Current tech giants have already captured chips and cloud computing. That is why Nvidia’s data-center revenue hit $18.4 billion in a single quarter, up 409% YoY, as AI hyperscalers started hoarding H100 chips like potable water before a drought. Amazon plowed $8 billion into Anthropic so that Claude will use Amazon Web Services (AWS) as its primary cloud and training partner and will utilize Amazon’s custom AI chips. OpenAI will purchase an additional $250 billion of Microsoft Azure cloud compute and has locked its model distribution to Azure’s cloud for the next decade.

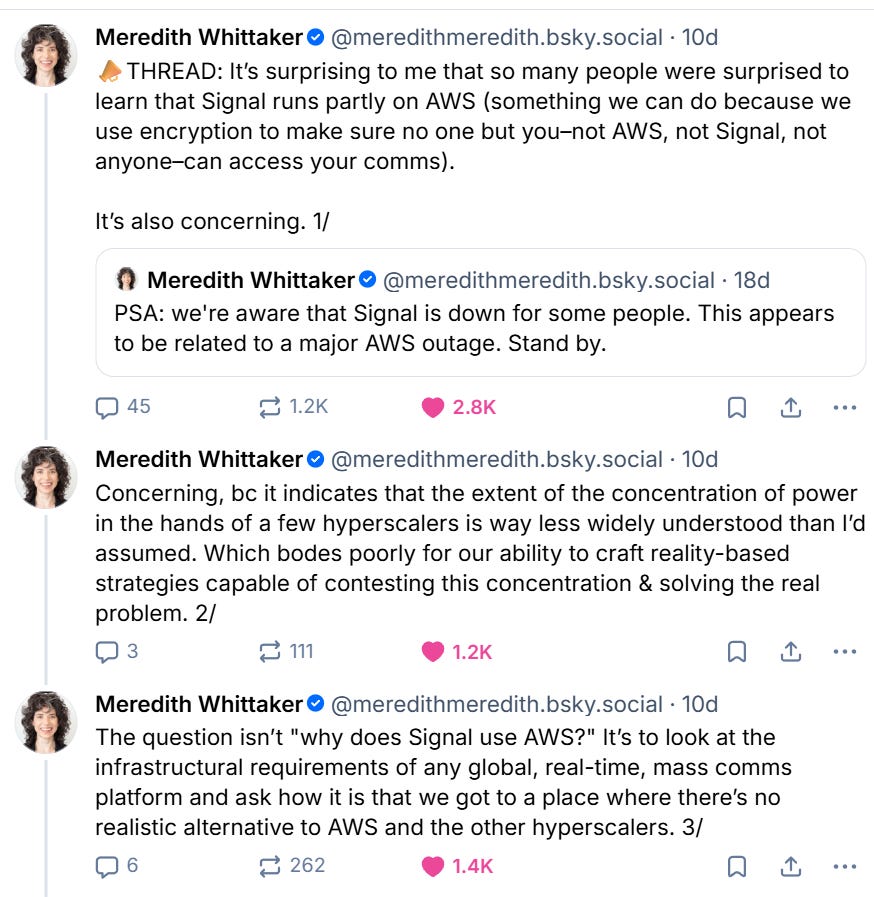

Such infrastructure costs billions and billions of dollars to provision and maintain, and it’s highly depreciable. In the case of the hyperscalers, the staggering cost is cross-subsidized by other businesses–themselves also massive platforms with significant lock-in […]

In short, the problem here is not that Signal ‘chose’ to run on AWS. The problem is the concentration of power in the infrastructure space that means there isn’t really another choice: the entire stack, practically speaking, is owned by 3-4 players.

This brings us to the next point:

Strong-arming society into technological lock‑in

Infrastructure capture is not just about finding one bottleneck to own, because bottlenecks can be remedied by simply building more capacity over time through market competition or government investment. Real infrastructure capture today is much more about owning the full value chain, tech stack or ecosystem. That is why the current AI players aim to cement vertical control (chips → cloud → model → API), where each layer reinforces the others and makes competition almost impossible. The current AI web of partnerships and strategic investments has only one goal: to entrench big tech incumbents across multiple layers of the value chain.

Good luck trying to spin up an alternative service provider when technical incompatibilities, contractual exclusivity and prohibitively expensive user switching costs sabotage any kind of fair competition.

Historical examples include AT&T’s telephone monopoly and Microsoft’s bundling of Windows and Internet Explorer in the 1990s, both of which stifled competition until antitrust interventions. Yet they seem really tame compared to the AI tech stack capture we observe today by what is basically an industry cartel.

A captured tech stack puts handcuffs on all of society; stifling innovation and making economic alternatives unviable.

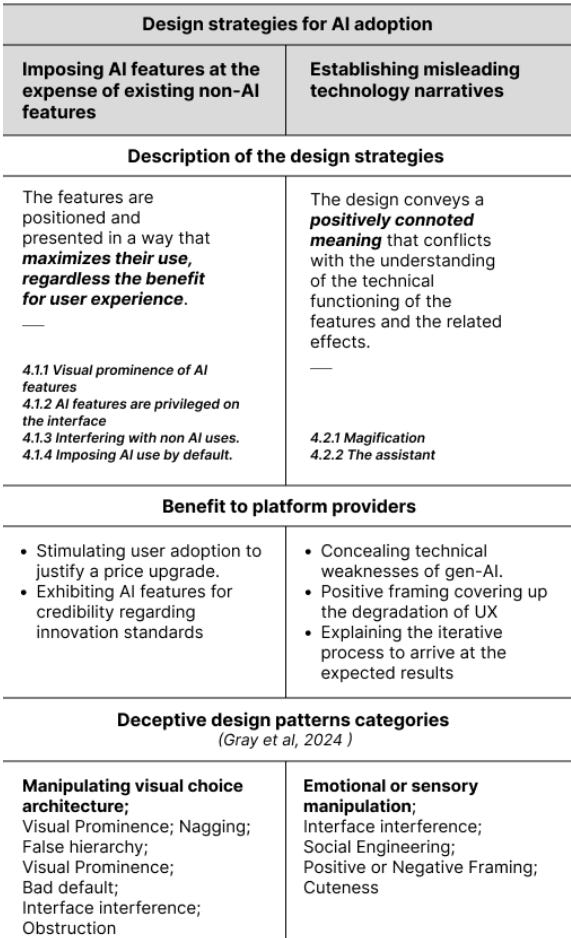

One idea we have to rid ourselves of is that technological lock-in just happens as a by-product of innovation, or of the first-mover advantage that comes with offering new technological capabilities. No, these are deliberate business decisions: rapid adoption is the biggest challenge these companies face, and they need to race to entrench themselves before the dust settles.

We can feel their urgency by opening any app these days. They simply put their AI everywhere so people cannot escape it. Again, this is no coincidence.

An article by Brian Merchant puts it succinctly: “How big tech is force-feeding us AI.”

By inserting AI features onto the top of messaging apps and social media, where it’s all but unignorable, by deploying pop-ups and unsubtle design tricks to direct users to AI on the interface, or by pushing prompts to use AI outright, AI is being imposed on billions of users, rather than eagerly adopted.

When your AI business model is to extract rent and exert power through infrastructure and technological lock-in, you had better find a million ways to force people to adopt your AI stuff, no matter how crappy, immature or outright unsafe, by any and all means necessary, even leveraging the Trump administration to put pressure on EU regulation.

Technological lock-in is key because it ensures the dominance of tech-turned-AI giants for generations to come. And we know that dominant firms face little pressure to improve quality, protect consumers, or maintain reasonable prices. Instead, once we are locked in, the real squeeze can start.

Preparing for the big squeeze with data colonialism

AI models are built on collective data: the internet’s archives, social media posts, public domain works, academic research, and user interactions. Yet, as these commons are appropriated into proprietary model weights, they cease to be shared public knowledge and become enclosed corporate property.

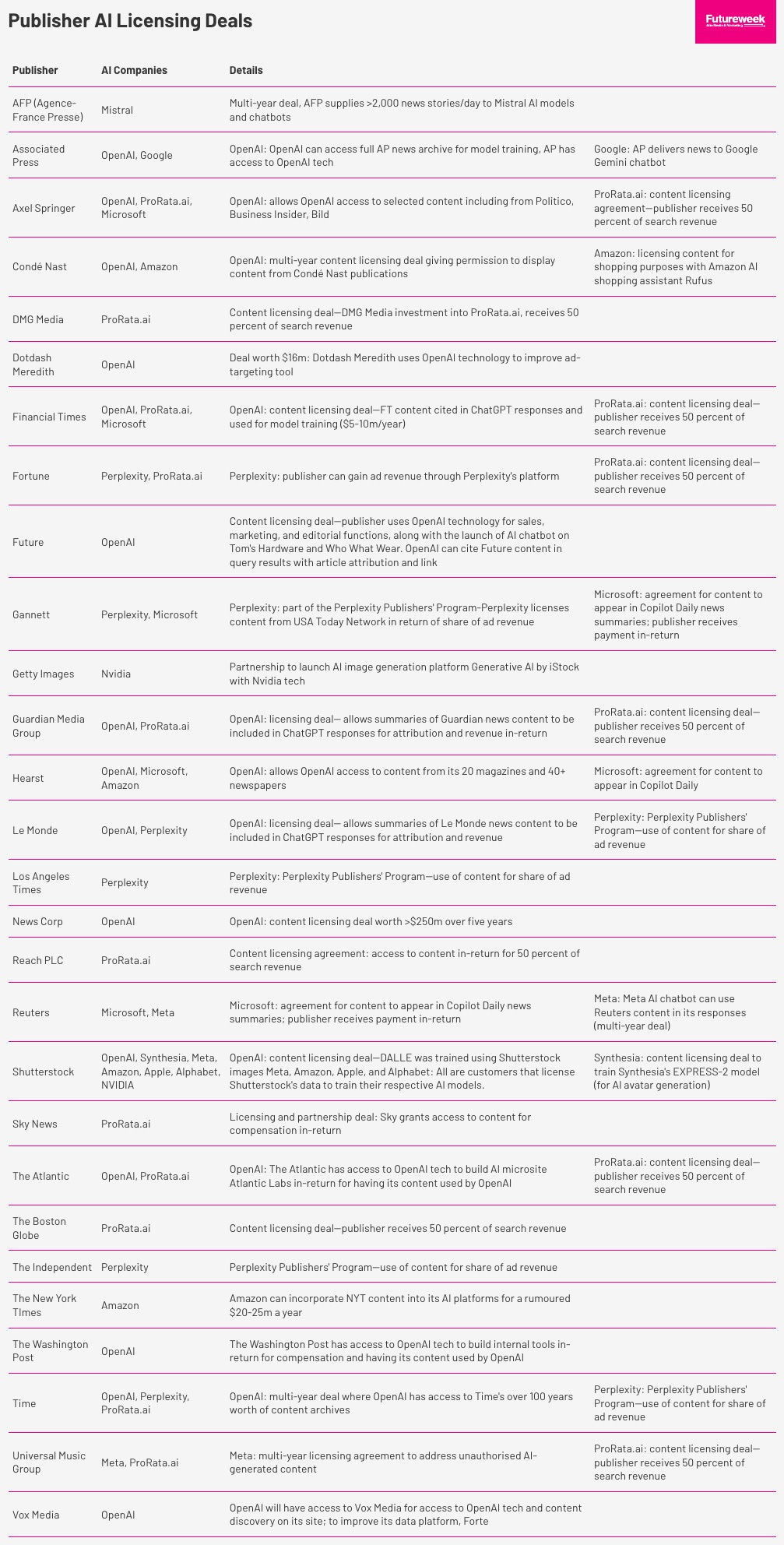

The AI hunger for data is palpable, not least shown by a steady drip of licensing deals between content publishers and AI hyperscalers.

With a lot of money going around, cash‑starved newsrooms are happily negotiating with AI firms whose models already cannibalize their traffic but now promise them a share of the AI boom and newfound social relevance.

Take a look at The Associated Press:

The news organization has spent the last nine to 12 months making its tens of millions of content assets across text, video, photos and audio formats, machine-readable for LLMs to assimilate easily. […]

“The best AI has the best inputs and generates the best outputs. This is the inverse of garbage in, garbage out,” said Roberts at the summit. “This means that in the future, we need more good quality, high quality content, not less. We’re going to transform the entire multi-trillion-dollar global economy based on AI, and AI fundamentally needs great information to be great”

Then there’s Reddit, which disclosed AI data-licensing contracts with Google and OpenAI, selling its user content for training in exchange for boosts in AI search, immediately tripling its user base thanks to Google AI search.

Sounds like a win-win, right? Let me come to that in a second.

Before that, we also need to acknowledge that AI hyperscalers are rarely so kind as to ask for information rather than pirating it by scraping the internet, or worse. Nearly all major AI companies are currently in legal fights over blatant copyright infringement involving material they had no legal way of obtaining, such as books or art. Anthropic recently settled one case for $1.5 billion for pirating half a million books. Peanuts for AI hyperscalers drunk on investor money and the prospect of robbing humanity blind in the future.

But this will make AI just more useful to users, so why worry about how companies get there?

This dynamic mirrors what Nick Couldry and Ulises Mejias call data colonialism: the systematic appropriation of human life through data extraction, justified by narratives of progress and innovation. There was also a time when big tech platforms, from Google Search to social media, felt genuinely useful and empowering to users. How did that turn out?

The Canadian writer Cory Doctorow coined the term “enshittification” to describe the lifecycle of digital platforms that initially serve users, then prioritize business customers, and finally exploit both for maximum profit.

Once users, creators, and institutions are locked in and have no real alternatives, the owners of the infrastructure can begin to systematically extract value from every layer of human activity.

Remember social media platforms that once felt like open, empowering infrastructure? It is no coincidence they quickly devolved into an extractive apparatus for mass exploitation, with all the negative social and political consequences for society we have to grapple with today.

The AI data colonialism currently happening is even more worrisome. The common goods of our digital lives—language, images, art, stories, code—are either mined, bought or simply stolen to produce systems that we then must rent access to through corporate APIs and subscriptions. Every prompt becomes a micro-transaction in a planetary rent economy, and everybody will pay for what was once a public good.

And the social and political consequences will be almost unfathomable.

Something new: owning the interface to reality

Historically, media theorists have warned that the medium shapes the message. Today, it is more like: Control the interface, control the story.

As AI systems are installed as the default lens on the web, they act as new gatekeepers that will arbitrate which facts surface and which fall through the cracks. How thoroughly vulnerable this approach is to the whims of the billionaire oligarchs who own the AI systems will surprise exactly nobody who has taken even a passing glance at Elon Musk’s Grokipedia.

“Shortly after launch, several sources described articles as promoting right-wing perspectives, conspiracy theories, and Elon Musk’s personal views. Other criticism of Grokipedia focused on its accuracy and biases due to AI hallucinations and potential algorithmic bias.”

- Wikipedia (which is actually a community-based model that has proven invaluable to the world)

This is subtler and more pervasive than any previous censorship or propaganda system because it operates under the guise of assistance and personalization while selling a very specific and narrow ideological worldview.

The more these captured AI models mediate our access to information, summarizing search results, generating news reports, drafting our communications, the less we experience the raw plurality of perspectives that once defined the open web.

Gatekeepers, censorship and propaganda are of course only the surface of how bad things can get.

AI is currently also being deployed to create an immeasurable amount of slop, deepfakes, fan fiction and disinformation that will fundamentally pollute and destroy the information ecosystem as we know it, making it basically unusable for human agents. Leading the pack is of course X.

“We’re trending quickly toward an internet that could be 99.9% AI-generated content, where agents and bots outnumber humans not just in traffic but in creative output. AI agents chat with each other on forums, generate news articles, create thought leadership, engage on social media, leave product reviews, spark controversy, and post memes. Each bot believes it’s talking to a human. Each human statistically is engaging with a bot. Every interaction seems plausible, even engaging. But it’s all synthetic.”

- Benjamin Wald, Galaxy

When the signal-to-noise ratio of true information is one in a million within an ocean of related, personalized and microtargeted lies that are optimized to be emotionally compelling, what confidence can we ever have in any information we consume?

Our current chaotic and broken information ecosystems already breed epistemic nihilism, where citizens believe that nothing is ever really true except for what feels right and convenient in the moment.

It is no surprise that so many people turn to AI models and treat them as answering machines, oracles or all-knowing gods rather than fact-checking and verifying sources themselves. That cognitive dependency on AI models and agents as navigation tools will only become more profound as time progresses.

Do I really have to point out the power differential when a few entities own and control the AI gatekeepers that generate the bulk of information flows at a time when billions depend on AI for cognitive navigation? A market monopoly would bring with it a much more powerful epistemic monopoly: control over the conditions of knowing.

Linguists have already noticed that AI “externalized thinking so completely that it makes us all equal” in our use of language. It decreases democratic plurality in the worst way possible: by boiling our human diversity of thought and expression down into a homogeneous language slop.

Once users internalize the AI model’s outputs as truth, it will homogenize not only how we speak but also how we reason and see the world. I for one would put that power under as much democratic accountability as possible.

Leaving AI infrastructure to tech moguls will enshrine a trillionaire-friendly 21st century mix of corpo-Orwellian “goodspeak” and manufactured consent.

Or much worse… but I do not want to give them any more ideas.

So let’s sum up the current state of affairs:

Welcome to the robber‑mogul playbook

All this leads me to an unpleasant but not unexpected conclusion:

Today’s AI arms race is best understood as a calculated corporate power grab: spend unprecedented sums on compute, secure strategic infrastructure along a vertical tech stack, use your investor billions to manipulate, muscle or coerce a critical mass of users and industry into rapid adoption of your stack despite productivity and expertise losses, security risks and environmental destruction, and let the narrative fog of “AGI” anxieties, geopolitics and empty promises cover up the construction of your Death Star rentier empire.

Unfortunately, the AI infrastructure boom for these tech moguls isn’t about reaching AGI, winning against China, or unlocking a productivity utopia and abundance for humanity. As if any of these narcissistic wannabe sun-king egos would ever spare ambition for anybody but themselves. They play into these narratives with their appearances, press releases and pocketbooks to keep the media and the population looking somewhere else.

But best I can tell, the current evidence suggests that the AI race is mostly about an older, more mundane, imperialistic impulse from centuries past that vast wealth inequality has always engendered: A lust for power. Dominance over the means of human affairs, from collaboration to production, enshrining their position over society for generations to come.

It’s a feudalism reboot attempt for the information age.

If the tech moguls win their gambit, if they succeed at infrastructure capture, technological lock-in and public dispossession of our shared information ecosystem, we will have ceded more than we bargained for. Once control over knowledge creation, distribution and consumption (and with it, increasingly, cognitive agency) is lost for most of us, what awaits is a new dark age of monarchs, myths, manipulation and magical thinking.

A handful will own, a tiny minority will benefit, and the rest of us will fall into exploitation, exclusion or serfdom.

How to stop feudal entrenchment

What would a non‑feudal alternative require?

It is a question that many people are currently working on in the EU and elsewhere. One of them is Robin Berjon, a seasoned technologist and governance expert whose career spans web standards, open protocols, data governance in media, and next-generation infrastructure like IPFS. He works on figuring out how digital systems can be governed in the public interest. He was also kind enough to sit down for a chat with me and outline solutions.

“We really have to break this myth that monopolies were made by innovation, and that they could be broken by innovation.”

- Robin Berjon

He emphasized that the first imperative is to treat digital and AI infrastructure as public-interest infrastructure rather than private consumer products. That means regulation, not innovation, is needed. Historical lessons from railroads and telecom monopolies show that when essential systems are privately captured, democratic oversight collapses. As a strong proponent of digital sovereignty, Robin was very clear about the stakes:

“We have to treat this like a war effort... what you’re fighting for is the right to set your own rules in your own country.”

- Robin Berjon

While my investigation into this topic is still very much ongoing, I already learned from him and others that there are good reasons for hope.

The current AI revolution presents not only a threat, but also an opportunity, to restore the foundations of democratic society in the digital age. If we, the people, aim to seize it now.

For example, there are already some practical solutions on offer, often with proven efficacy at smaller scales or throughout history.

So here is what we (and our representatives) should work on:

Establish pre-emptive regulatory guardrails today like the EU AI Act’s transparency and systemic-risk obligations, combined with antitrust enforcement to curb exclusive cloud-model tie-ups and break vertical control by tech cartels.

Enshrine capabilities-centric infrastructure neutrality for things like identity verification, safety or content moderation, ensuring that plural applications can compete with each other without each having to build its own capabilities to fend off the bots, spam, scams or cyber attacks that any digital endeavor suffers from.

Demand open protocols, federated architectures and interoperability standards (similar to ActivityPub, Bluesky’s AT Protocol, or Beckn) to reduce dependency on single platforms, prevent lock-in and empower citizens by transferring data ownership back to users.

Create governmental and public adoption incentives for pro-democracy communication infrastructures. Habermasian democracy depends on institutionalizing norms of discourse. Adoption incentives ensure these norms are embedded in digital spaces rather than being left to market forces.

Foster institutional density: AI should be regulated by a dense web of local institutions and governance models that reflect the diversity of communities and use cases. Start by building governance frameworks locally, then experiment and pressure-test; whatever works can then be shared or scaled horizontally to other domains.

“If you want democracy, it has to look like a hairball. Democracy is messy, but that’s what makes it resilient.”

- Robin Berjon

Allow for epistemic pluralism by encouraging many models with diverse priors; resist interface monopolies. Information has a powerful impact on our identity and worldview. Grant individuals the right to control the mechanisms and interfaces that filter their perception.

In short, we have to understand and reclaim digital infrastructure as a public good for all of society.

That is my key message for you today, and it is wildly at odds with current AI developments, media narratives and public attention.

Let’s change that, together.

"This article is a first essay out of my larger research focus on building participatory democracy in the digital age: how to counteract the global authoritarian movement. Given recent AI news and a sense of urgency, I wanted to share one idea that maybe is worth a discussion today."

I agree with your sense of urgency. As a software engineer I am thinking a lot about the technological side of this.

Fascinating. Your point about the 'Nvidia-state' being a precariously placed load-bearing piece of the global economy is really sharp. How do you see that specific vulnerability playing into the larger authoritarian challenges you're researching?